When you’re working with cloud-based applications, finding ways to make workflows efficient and affordable is key—especially when you’re dealing with high-volume tasks. That’s where AWS Express Step Functions can be a game-changer. Unlike standard Step Functions, Express Step Functions are optimized for speed and cost-efficiency, making them perfect for short-duration, high-frequency workflows. They allow you to run tasks at scale without paying for resources you don’t need, helping you save on both time and budget.

Whether you’re processing data in real-time, automating ETL jobs, or orchestrating microservices, Express Step Functions can simplify complex workflows while keeping costs in check. In this guide, we’ll look at how AWS Express Step Functions work, why they’re an ideal solution for cost-effective workflows, and how you can start using them to streamline your operations without breaking the bank.

History: I first turned to AWS Express Step Functions for cost optimization while working on a project where each file needed to go through multiple steps, with every step relying on the previous one. We decided to use AWS Step Functions for a seamless workflow. However, as the number of files increased, the cost of Step Functions soared to $25k per month, so we needed to optimize costs.

Solution: We switched to Express Step Functions, and the cost was reduced to 80%.

Challenges: AWS Express Step Functions come with a 5-minute timeout limit for each execution. As the size of our files grew, our processing began to exceed this limit, leading to timeouts. Unfortunately, the boto3 API does not offer a solution for this issue.

How to overcome the challenges: We used a CloudWatch Logs subscription filter to stream log events to Kinesis only when a timeout occurs.

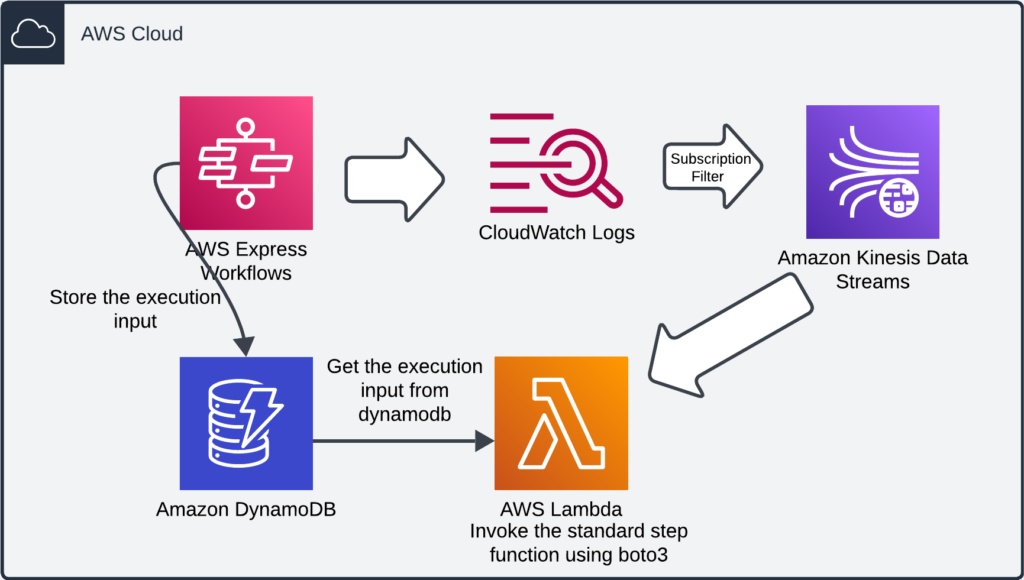

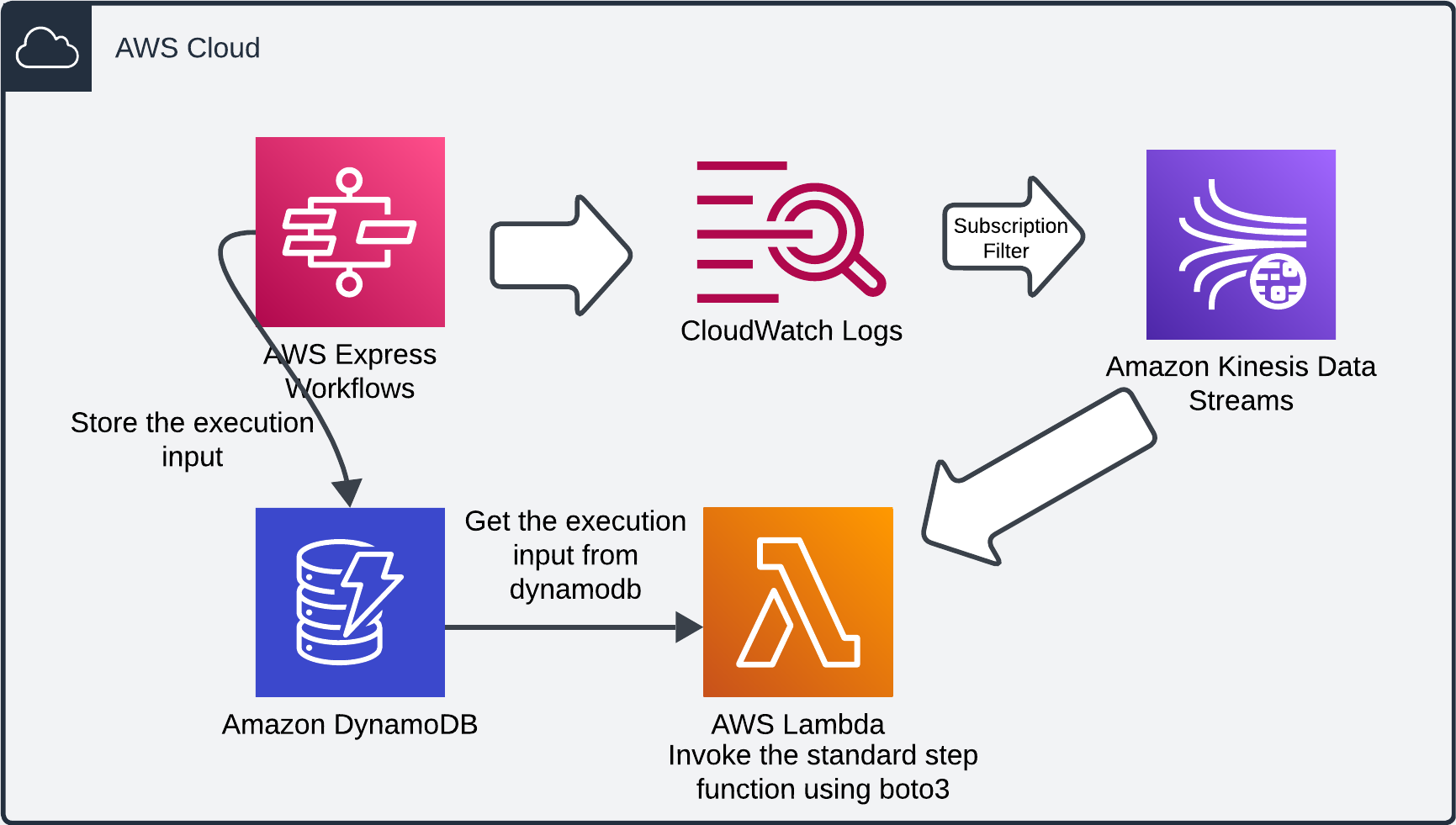

I’ve illustrated the architecture in the diagram above, which should be fairly self-explanatory. Each component does the following:

AWS Express workflow – Execution starts here. The workflow writes its logs to CloudWatch and stores its input JSON in DynamoDB.

CloudWatch Logs – In the CloudWatch Subscription Filter tab, we add a JSON filter pattern that captures execution timeout logs and streams them to the Kinesis data stream. It looks something like this –

{$.type = ExecutionTimedOut}
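The same filter can also be created from code instead of the console. The following is a minimal sketch, assuming a boto3 CloudWatch Logs client is passed in; the filter name and the helper function names are hypothetical, and the log group, stream ARN and IAM role ARN are placeholders you would supply yourself.

```python
from typing import Any

# Filter pattern taken verbatim from the article.
TIMEOUT_FILTER_PATTERN = "{$.type = ExecutionTimedOut}"


def build_subscription_filter_args(
    log_group: str, stream_arn: str, role_arn: str
) -> dict[str, Any]:
    """Build keyword arguments for the CloudWatch Logs put_subscription_filter call."""
    return {
        "logGroupName": log_group,
        "filterName": "express-execution-timeouts",  # hypothetical name
        "filterPattern": TIMEOUT_FILTER_PATTERN,
        "destinationArn": stream_arn,  # ARN of the Kinesis data stream
        "roleArn": role_arn,  # role that lets CloudWatch Logs write to the stream
    }


def create_timeout_filter(
    logs_client: Any, log_group: str, stream_arn: str, role_arn: str
) -> None:
    """Attach the timeout-only subscription filter to the workflow's log group."""
    logs_client.put_subscription_filter(
        **build_subscription_filter_args(log_group, stream_arn, role_arn)
    )
```

In a real setup you would call `create_timeout_filter(boto3.client("logs"), ...)` once per log group. Passing the client in keeps the function easy to exercise with a stub.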

Kinesis data stream – When data arrives in Kinesis, it triggers the Lambda function to process the log. The log gives us only the execution ID of the Express workflow run that handled the file.
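To make the log-processing step concrete, here is a rough sketch of how a Lambda function might pull the execution identifier out of a Kinesis event. CloudWatch Logs gzip-compresses subscription payloads and Kinesis hands them to Lambda base64-encoded, so both layers are undone first. The function name is hypothetical, and the `execution_arn` field name is an assumption about the Step Functions log event format; check it against your own log group.

```python
import base64
import gzip
import json


def extract_timed_out_executions(event: dict) -> list[str]:
    """Return the execution ARNs of timed-out runs found in a Kinesis-triggered event."""
    execution_arns = []
    for record in event.get("Records", []):
        # Undo the base64 (Kinesis -> Lambda) and gzip (CloudWatch Logs) layers.
        raw = gzip.decompress(base64.b64decode(record["kinesis"]["data"]))
        payload = json.loads(raw)
        if payload.get("messageType") != "DATA_MESSAGE":
            continue  # skip CONTROL_MESSAGE records sent by CloudWatch Logs
        for log_event in payload.get("logEvents", []):
            message = json.loads(log_event["message"])
            # The subscription filter should already restrict events to
            # timeouts; the check is repeated here as a safety net.
            if message.get("type") == "ExecutionTimedOut":
                execution_arns.append(message["execution_arn"])  # assumed field name
    return execution_arns
```

The handler would call this first and then look up each returned identifier in DynamoDB.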

Lambda function – Using the execution ID, we query DynamoDB to retrieve the input JSON stored during the execution. We then use the AWS boto3 API to invoke a standard Step Function, replaying the execution with the same input JSON to process the file.
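The lookup-and-replay step could look roughly like the sketch below. It assumes a boto3 DynamoDB `Table` resource and a boto3 Step Functions client are passed in. The key name `execution_id`, the attribute name `input_json` and the function name are hypothetical, and the input is assumed to be stored as a JSON string.

```python
from typing import Any, Optional


def replay_on_standard_workflow(
    execution_id: str,
    table: Any,
    sfn_client: Any,
    standard_state_machine_arn: str,
) -> Optional[str]:
    """Re-run a timed-out Express execution on a standard state machine.

    Returns the ARN of the new execution, or None if no input was stored.
    """
    # Fetch the input JSON saved when the Express execution started.
    item = table.get_item(Key={"execution_id": execution_id}).get("Item")
    if item is None:
        return None  # nothing to replay for this execution ID

    # Start the standard workflow with exactly the same input.
    response = sfn_client.start_execution(
        stateMachineArn=standard_state_machine_arn,
        input=item["input_json"],  # assumed to already be a JSON string
    )
    return response["executionArn"]
```

Because standard workflows are not bound by the 5-minute limit, the large files that timed out can finish there, while everything else stays on the cheaper Express path.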

DynamoDB – We store the execution ID along with the input JSON of the execution.
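As an illustration of the storage side, the write could be as small as the sketch below, for example from a Lambda task at the start of the Express workflow. It assumes a boto3 DynamoDB `Table` resource is passed in; the attribute names `execution_id` and `input_json` and the function name are hypothetical. Inside a state machine, the execution identifier is available from the context object as `$$.Execution.Id`.

```python
import json
from typing import Any


def save_execution_input(table: Any, execution_id: str, workflow_input: dict) -> None:
    """Store the workflow input under its execution ID so it can be replayed later."""
    table.put_item(
        Item={
            "execution_id": execution_id,  # hypothetical partition key name
            # Serialized so the exact same payload can be passed to a replay.
            "input_json": json.dumps(workflow_input),
        }
    )
```

Storing the input as a single JSON string keeps the replay simple. Keep in mind that a DynamoDB item is limited to 400 KB, so this suits inputs that reference a file rather than embed it.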

If you have any questions or need more details on this topic, feel free to let me know in the comments.

Thank you
